Neuro-Symbolic AI for Deciphering Cetacean Echolocation Grammars: Unveiling Interspecies Semantic Structures in Marine Bioacoustics

Cetacean vocalizations, particularly the click-based signals used for navigation, foraging, and communication, have long fascinated scientists. These signals are hypothesized to carry grammatical structure and semantic content, in some respects analogous to human language, but they remain largely undeciphered. Recent advances in neuro-symbolic Artificial Intelligence (AI), which combines connectionist deep learning with symbolic reasoning, offer a promising avenue for studying the communication of these marine mammals. This article explores how neuro-symbolic AI could improve our understanding of cetacean echolocation grammars, potentially revealing shared semantic structures across species and informing future work on interspecies communication.

The study of cetacean communication has been significantly advanced by projects such as Project CETI (Cetacean Translation Initiative), which focuses on sperm whale vocalizations. Research has shown that sperm whale codas (short sequences of clicks) exhibit contextual and combinatorial structure, suggesting a more expressive communication system than previously understood (Sharma et al., 2024). These findings identify two features sensitive to conversational context, termed "rubato" and "ornamentation," which combine with rhythm and tempo to create a large inventory of distinguishable codas. This complexity calls for analytical tools that can handle nuanced, context-dependent features, a challenge well suited to neuro-symbolic approaches.
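To make the notions of rhythm and tempo concrete, the following is a minimal sketch of one way a coda could be represented in code. It assumes a coda is stored as a list of click onset times, that tempo can be approximated by overall duration, and that rhythm can be approximated by inter-click intervals normalized by that duration. The `Coda` class, its method names, and the example timings are hypothetical and are not taken from the cited study.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Coda:
    """A coda stored as click onset times in seconds (hypothetical format)."""
    click_times: List[float]

    def inter_click_intervals(self) -> List[float]:
        # Time gaps between consecutive clicks.
        return [b - a for a, b in zip(self.click_times, self.click_times[1:])]

    def duration(self) -> float:
        # Overall length of the coda; used here as a stand-in for tempo.
        return self.click_times[-1] - self.click_times[0]

    def rhythm(self) -> List[float]:
        # Intervals normalized by duration, so the pattern is tempo-independent.
        total = self.duration()
        return [ici / total for ici in self.inter_click_intervals()]


# Two invented codas: same relative timing, different overall speed.
slow = Coda([0.0, 0.2, 0.4, 0.8])
fast = Coda([0.0, 0.1, 0.2, 0.4])

same_rhythm = all(
    math.isclose(x, y) for x, y in zip(slow.rhythm(), fast.rhythm())
)
print(same_rhythm, slow.duration(), fast.duration())
```

Under these assumptions the two codas share a rhythm but differ in tempo, which illustrates how independent features can combine into a larger inventory of distinguishable signals.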

Neuro-Symbolic AI: Bridging Deep Learning and Symbolic Reasoning

Traditional AI approaches to bioacoustics have often relied on either purely statistical machine learning models, which excel at pattern recognition but lack interpretability, or rule-based symbolic systems, which are interpretable but struggle with the noisy, high-dimensional data characteristic of animal vocalizations. Neuro-symbolic AI aims to overcome these limitations by integrating deep neural networks, capable of learning complex representations from raw sensory data, with symbolic reasoning mechanisms that can represent and manipulate knowledge, enforce constraints, and perform logical inference.

This hybrid approach is particularly relevant for deciphering animal communication. For instance, a neural component could process large datasets of cetacean clicks, identifying subtle acoustic features and patterns that indicate basic units or "click-types." The symbolic component could then use these learned representations to infer grammatical rules, discover compositional structures (how basic units combine to form meaningful sequences), and ultimately build models of cetacean communication. Research on neuro-symbolic and related frameworks for linguistic structure and grammar induction (Bonial & Tayyar Madabushi, 2024; Ellis et al., 2022) and for abstraction and reasoning tasks (Bober-Irizar & Banerjee, 2024) demonstrates the growing maturity of these techniques and their potential applicability to complex, structured data such as animal vocalizations. van Bekkum et al. (2021) provide a taxonomy of design patterns for such hybrid systems, offering a blueprint for developing tailored neuro-symbolic architectures for bioacoustic analysis.
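The hand-off from the neural component to the symbolic one can be sketched as a quantization step. In the minimal example below, a nearest-centroid lookup stands in for a trained neural encoder, which is a deliberate simplification. The two-dimensional feature vectors, the `CENTROIDS` table, and the symbol names `A`, `B`, and `C` are all hypothetical.

```python
from typing import Dict, List, Sequence

# Hypothetical click-type prototypes in a 2-D feature space. In a real system
# these would come from a learned encoder, not a hand-written table.
CENTROIDS: Dict[str, Sequence[float]] = {
    "A": (0.0, 0.0),
    "B": (1.0, 0.0),
    "C": (0.0, 1.0),
}


def squared_distance(u: Sequence[float], v: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(u, v))


def to_symbol(features: Sequence[float]) -> str:
    # Assign the click to the nearest prototype.
    return min(CENTROIDS, key=lambda name: squared_distance(features, CENTROIDS[name]))


def symbolize(sequence: Sequence[Sequence[float]]) -> List[str]:
    # Turn a sequence of feature vectors into a sequence of discrete symbols.
    return [to_symbol(f) for f in sequence]


# Invented feature vectors for four consecutive clicks.
features = [(0.1, -0.1), (0.9, 0.2), (0.2, 0.8), (0.05, 0.0)]
symbols = symbolize(features)
print(symbols)
```

The resulting symbol sequence is the kind of discrete input a symbolic component could reason over, while the continuous acoustic detail stays on the neural side.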

Unveiling Grammatical Structures and Semantic Content

A key hypothesis in marine bioacoustics is that cetacean echolocation sequences are not random but follow specific grammatical rules that govern the arrangement of clicks. Neuro-symbolic AI can be employed to learn these grammars directly from acoustic data. For example, techniques from grammar induction, enhanced with deep learning for feature extraction, could identify recurring patterns, dependencies between click types, and hierarchical structures within vocalization sequences. The symbolic component can represent these learned grammars in an explicit, human-understandable format, allowing researchers to directly inspect and validate the discovered linguistic rules.
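As a rough illustration of an explicit, inspectable grammar, the sketch below extracts transition rules from symbol sequences by counting adjacent pairs. Bigram counting is far simpler than the grammar induction methods discussed in the literature and is used here only as a stand-in. The function name `induce_bigram_rules`, the `min_count` threshold, and the toy corpus are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Sequence, Tuple

Rule = Tuple[str, str]


def induce_bigram_rules(
    sequences: Iterable[Sequence[str]], min_count: int = 2
) -> Dict[Rule, float]:
    """Return rules (a, b) -> P(b follows a) for pairs seen at least min_count times."""
    pair_counts: Counter = Counter()
    first_counts: Counter = Counter()
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            pair_counts[(a, b)] += 1
            first_counts[a] += 1
    return {
        pair: count / first_counts[pair[0]]
        for pair, count in pair_counts.items()
        if count >= min_count
    }


# Invented corpus of click-type sequences.
corpus = [list("ABAB"), list("ABC"), list("CAB")]
rules = induce_bigram_rules(corpus)
print(rules)
```

Each surviving rule is a readable statement of the form "B follows A with probability p", which a researcher can check against field recordings. Hierarchical structure would need a richer formalism than this.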

Beyond syntax, the ultimate goal is to understand the semantic content of these vocalizations: what do different click sequences mean? Neuro-symbolic models can approach this question by correlating vocalization patterns with behavioral and environmental contexts. For instance, if specific click sequences consistently co-occur with foraging behaviors or particular social interactions, the system can propose a candidate semantic link, which researchers would then need to test, since co-occurrence alone does not establish meaning. Symbolic reasoning would allow the system to build a lexicon of candidate click meanings and to explore how these meanings combine, potentially revealing how cetaceans represent and communicate information about their world, their intentions, or their social relationships. The work by Sharma et al. (2024) on contextual and combinatorial structure in sperm whale vocalizations lays a crucial foundation, suggesting that the building blocks for such semantic analysis are present.
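One simple way to quantify such co-occurrence is pointwise mutual information (PMI) between a click sequence and a context label. The sketch below assumes annotated observations are available as (sequence, context) pairs. The labels and counts are invented for illustration, and PMI is only one of many association measures that could be used.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Observation = Tuple[str, str]  # (click sequence, behavioral context)


def pmi_table(observations: List[Observation]) -> Dict[Observation, float]:
    """PMI(s, c) = log2( P(s, c) / (P(s) * P(c)) ) for each observed pair."""
    n = len(observations)
    joint = Counter(observations)
    seq_counts = Counter(s for s, _ in observations)
    ctx_counts = Counter(c for _, c in observations)
    return {
        (s, c): math.log2((count / n) / ((seq_counts[s] / n) * (ctx_counts[c] / n)))
        for (s, c), count in joint.items()
    }


# Invented annotations; a real dataset would be far larger and noisier.
observations = [
    ("AB", "foraging"),
    ("AB", "foraging"),
    ("AB", "socializing"),
    ("CC", "socializing"),
]
table = pmi_table(observations)
for pair, score in sorted(table.items()):
    print(pair, round(score, 3))
```

A positive score means the pair occurs together more often than chance would predict, and a negative score means less often. Scores from so few observations are unreliable, and even a strong association is a hypothesis about meaning, not evidence of it.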

Exploring Interspecies Semantic Structures and Commonalities

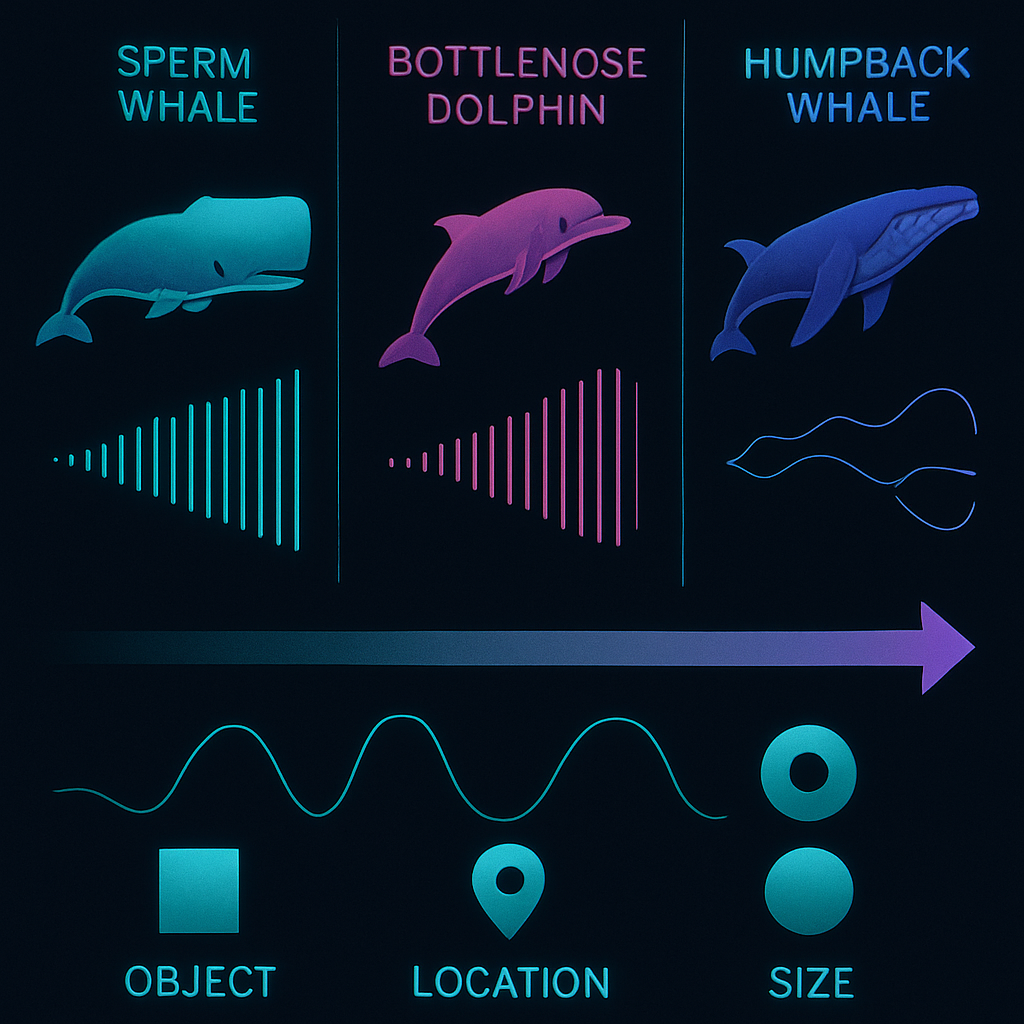

One of the most exciting, albeit speculative, frontiers is the possibility that different cetacean species, or even different groups within a species, might share underlying semantic primitives or grammatical motifs in their echolocation-based communication. While species-specific vocal repertoires are well-documented, evolutionary pressures and shared aquatic environments might have led to convergent evolution of certain communicative strategies or semantic concepts (e.g., signals for "danger," "food source," or social affiliation).

A neuro-symbolic approach could be pivotal in investigating such interspecies linguistic commonalities. By training models on data from multiple cetacean species (e.g., dolphins, porpoises, and various whale species), the AI could identify shared symbolic representations or grammatical rules that transcend species boundaries. This would involve mapping the distinct acoustic features of different species onto a common symbolic space where comparisons can be made. If successful, this could lead to a "universal grammar" of cetacean echolocation, highlighting the fundamental principles of their communication and potentially identifying semantic concepts that are broadly understood across the cetacean order. Studies on the evolution of vocal learning and communication in mammals, such as the work on humanized NOVA1 splicing factor affecting mouse vocalizations (Tajima et al., 2025), and the structural precursors of language networks in chimpanzees (Becker et al., 2025), provide an evolutionary context for exploring such deep homologies and convergences in communication systems.
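The idea of a common symbolic space can be sketched as follows. Species-specific units are mapped to shared symbols, and the overlap between the resulting sets of adjacent-symbol patterns is measured with Jaccard similarity. The mapping tables, unit names, and sequences are entirely hypothetical, and in practice the mapping itself would be the hard part to learn and validate.

```python
from typing import Dict, Iterable, List, Sequence, Set, Tuple

Rule = Tuple[str, str]

# Hypothetical mappings from species-specific click types to shared symbols.
SPECIES_A_MAP: Dict[str, str] = {"a1": "X", "a2": "Y", "a3": "Z"}
SPECIES_B_MAP: Dict[str, str] = {"b1": "X", "b2": "Y", "b3": "Z"}


def to_shared(sequence: Sequence[str], mapping: Dict[str, str]) -> List[str]:
    # Rewrite a species-specific sequence in the shared alphabet.
    return [mapping[unit] for unit in sequence]


def bigrams(sequences: Iterable[Sequence[str]]) -> Set[Rule]:
    # Collect every adjacent pair of symbols seen in any sequence.
    return {(a, b) for seq in sequences for a, b in zip(seq, seq[1:])}


def jaccard(x: Set[Rule], y: Set[Rule]) -> float:
    union = x | y
    return len(x & y) / len(union) if union else 0.0


# Invented sequences for two species.
species_a = [["a1", "a2", "a3"], ["a1", "a2"]]
species_b = [["b1", "b2"], ["b3", "b1"]]

shared_a = bigrams(to_shared(s, SPECIES_A_MAP) for s in species_a)
shared_b = bigrams(to_shared(s, SPECIES_B_MAP) for s in species_b)
overlap = jaccard(shared_a, shared_b)
print(sorted(shared_a & shared_b), round(overlap, 3))
```

A nonzero overlap in this toy setting shows only that the comparison is computable once a shared alphabet exists. Whether any such overlap reflects common semantics would depend on how the mapping was obtained and on independent behavioral evidence.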

Conclusion

Neuro-symbolic AI could substantially improve our ability to decipher the complex echolocation grammars of cetaceans. By combining the pattern-recognition strengths of deep learning with the inferential capabilities of symbolic reasoning, these systems offer an opportunity to move beyond cataloging vocalizations toward an understanding of their structure, their meaning, and potentially their shared semantic foundations across species. As these tools are refined and more comprehensive data are gathered, we may not only learn far more about cetacean communication but also take tentative steps toward meaningful interspecies dialogue, which would reshape our relationship with the intelligent life that shares our planet's oceans. The quest to understand the "language" of whales and dolphins is not just a scientific endeavor but also a path toward a deeper appreciation of the cognitive and communicative abilities of non-human intelligences.

References

- Atzmueller, M., Fürnkranz, J., Kliegr, T., & Schmid, U. (2024). Explainable and interpretable machine learning and data mining. Data Mining and Knowledge Discovery. https://doi.org/10.1007/s10618-024-01041-y

- Becker, Y. et al. (2025). Long arcuate fascicle in wild and captive chimpanzees as a potential structural precursor of the language network. Nature Communications. https://doi.org/10.1038/s41467-025-59254-8

- van Bekkum, M., de Boer, M., van Harmelen, F., Meyer-Vitali, A., & ten Teije, A. (2021). Modular design patterns for hybrid learning and reasoning systems. Applied Intelligence. https://doi.org/10.1007/s10489-021-02394-3

- Bober-Irizar, M., & Banerjee, S. (2024). Neural networks for abstraction and reasoning. Scientific Reports. https://doi.org/10.1038/s41598-024-73582-7

- Bonial, C., & Tayyar Madabushi, H. (2024). Constructing understanding: on the constructional information encoded in large language models. Language Resources and Evaluation. https://doi.org/10.1007/s10579-024-09799-9

- Butz, M. V. (2021). Towards Strong AI. KI - Künstliche Intelligenz. https://doi.org/10.1007/s13218-021-00705-x

- Ellis, K., Albright, A., Solar-Lezama, A., Tenenbaum, J. B., & O’Donnell, T. J. (2022). Synthesizing theories of human language with Bayesian program induction. Nature Communications. https://doi.org/10.1038/s41467-022-32012-w

- Schwalbe, G., & Finzel, B. (2022). A comprehensive taxonomy for explainable artificial intelligence: a systematic survey of surveys on methods and concepts. Data Mining and Knowledge Discovery. https://doi.org/10.1007/s10618-022-00867-8

- Sharma, P., Gero, S., Payne, R., Gruber, D. F., Rus, D., Torralba, A., & Andreas, J. (2024). Contextual and combinatorial structure in sperm whale vocalisations. Nature Communications. https://doi.org/10.1038/s41467-024-47221-8

- Tajima, Y. et al. (2025). A humanized NOVA1 splicing factor alters mouse vocal communications. Nature Communications. https://doi.org/10.1038/s41467-025-56579-2
