Computational Archaeolinguistics: Reconstructing Proto-Languages with AI Language Models

Archaeolinguistics, the study of the deep history of languages, strives to reconstruct proto-languages: the hypothetical ancestral tongues from which documented language families evolved. This endeavor has traditionally relied on the meticulous, labor-intensive comparative method, supplemented by lexicostatistics and, more recently, computational phylogenetic approaches. However, the sheer scale of linguistic data, the complexities of language change, and the often-sparse evidence present formidable challenges. The advent of sophisticated Artificial Intelligence (AI), particularly Large Language Models (LLMs), offers historical linguists a potentially transformative new toolkit. This article explores how AI might extend proto-language reconstruction beyond current computational methods, enabling deeper, more nuanced, and more data-rich inquiries into linguistic prehistory. We examine how LLMs could enhance data analysis, generate novel hypotheses, and potentially simulate linguistic evolution, and what this might mean for computational archaeolinguistics.

The core challenge lies in identifying faint signals of common ancestry amid millennia of divergence and change. Existing computational tools, such as those for visualizing relative chronologies of language changes (Wandl & Thelitz, 2024), are valuable, but LLMs may represent a paradigm shift: they can process and find patterns in vast, unstructured datasets, potentially uncovering relationships that elude traditional methods. This article speculates on how these AI capabilities might not only refine but also redefine our approach to uncovering the roots of human language.

Current Computational Horizons and the Analogical Leap from Biology

The application of computational methods to historical linguistics is not new. Phylogenetic models, extensively borrowed from evolutionary biology, have been instrumental in mapping language family trees (List et al., 2016). These approaches conceptualize languages as evolving entities, subject to processes analogous to biological speciation, drift, and horizontal transfer (borrowing). List et al. (2016) highlight how methods dealing with incomplete lineage sorting or sequence similarity networks could be adapted for linguistic analysis, offering frameworks to analyze mosaic word distributions or identify borrowed versus inherited lexicon. The study of how specific vocabulary sets, like those for rice cultivation, can indicate independent domestications or cultural spread (Sagart, 2011) further showcases how linguistic data informs prehistory.

However, these methods often rely on pre-processed, structured datasets of cognates and assumed sound laws. The identification of these fundamental units remains a significant bottleneck, often requiring expert intuition. Furthermore, modeling complex, non-tree-like evolutionary processes (e.g., extensive borrowing, creolization) remains challenging. While the development of computational linguistics has provided tools like probabilistic language models and deep learning for various tasks (Alduais et al., 2025), their specific application to the nuanced task of reconstructing deep ancestral languages is still nascent. The challenge is to move from analyzing contemporary or recent historical languages to inferring forms and structures that may have existed many millennia ago, for which direct evidence is entirely absent.

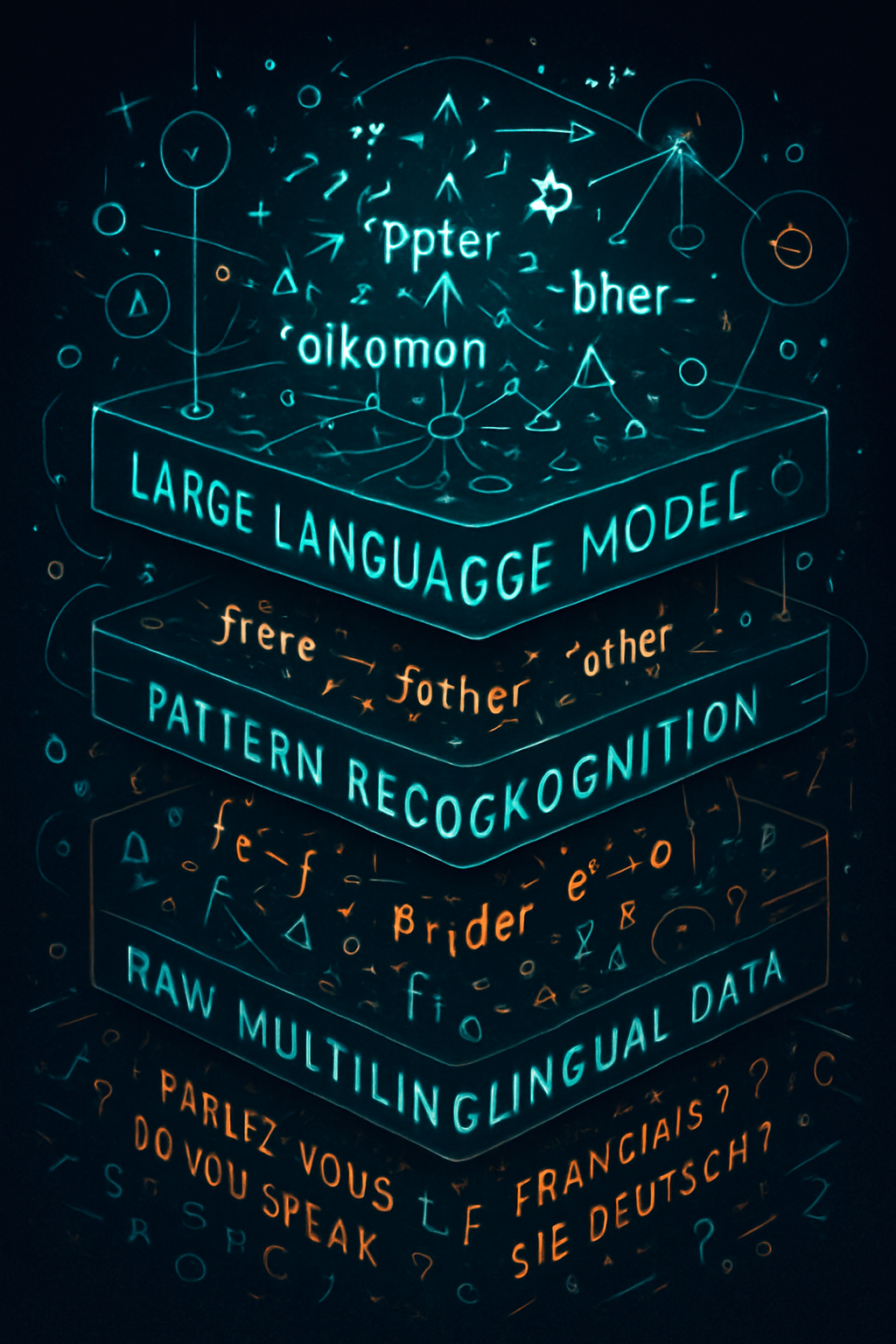

Large Language Models: Pattern Recognition and Hypothesis Generation at Scale

Large Language Models, with their sophisticated pattern recognition capabilities trained on massive textual corpora, offer new avenues for proto-language reconstruction. Their potential extends beyond mere data processing; they can assist in the core intellectual tasks of identifying cognates, proposing sound correspondences, and even generating plausible proto-forms. Imagine an LLM trained on lexical and grammatical data from all known Indo-European languages. It could potentially identify subtle, previously unnoticed cognate sets by learning complex, non-linear relationships between phonological forms and semantic fields across hundreds of languages. This moves beyond simple string similarity, incorporating learned "understanding" of semantic drift and phonological transformations.
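For contrast, the "simple string similarity" baseline that the paragraph above wants to move beyond can be sketched in a few lines. This is only an illustration, not a method taken from the cited literature: the function names `levenshtein` and `normalized_similarity` are hypothetical, and the word forms are simplified romanizations. The Armenian and Latin words for 'two' are a standard textbook case of true cognates with no surface resemblance, which is exactly where a surface metric fails.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def normalized_similarity(a: str, b: str) -> float:
    """Map edit distance to [0, 1]; 1.0 means identical strings."""
    if not a and not b:
        return 1.0
    return 1.0 - levenshtein(a, b) / max(len(a), len(b))


# Simplified romanizations, used for illustration only.
pairs = [
    ("pater", "fadar"),  # Latin / Gothic 'father': cognates, partly similar
    ("erku", "duo"),     # Armenian / Latin 'two': cognates, no surface overlap
]
for left, right in pairs:
    print(left, right, round(normalized_similarity(left, right), 2))
```

A surface metric of this kind gives the second pair the lowest possible score even though the words are related, which is the gap that a model with learned knowledge of regular sound change would need to close.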

Furthermore, LLMs could be used to generate hypotheses about sound laws. By analyzing patterns of correspondence between attested daughter languages, an LLM might propose multiple competing sound change rules, along with likelihood scores, that could explain the observed diversity. This could also extend to morphology. Smith (2023) discusses modeling sound change as constraint reranking in Diachronic Optimality Theory; LLMs could potentially learn such constraints and their historical shifts directly from data, offering a new way to formalize and test theories of phonological and morphological evolution. The ability of LLMs to handle and generate diverse outputs could also be harnessed to reconstruct not just single lexical items, but aspects of proto-grammars, inferring ancestral case systems or verbal conjugations based on their varied reflexes in daughter languages. This could be particularly powerful for exploring "linguistic dark matter" – features of proto-languages that have left only very faint or indirect traces.
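The idea of competing correspondence hypotheses with scores can be made concrete with a much simpler stand-in than an LLM: counting how often each segment in one language lines up with each segment in another, and using relative frequency as a crude score. The sketch below assumes the hard part (cognate selection and alignment) has already been done by hand; the function name `correspondence_scores` is hypothetical, and the data are word-initial consonants from well-known Latin and English pairs, plus one Latin loanword to show how borrowing produces a competing pattern.

```python
from collections import Counter, defaultdict


def correspondence_scores(pairs):
    """Relative frequency of each target segment, per source segment.

    `pairs` is an iterable of (source_segment, target_segment) tuples taken
    from already-aligned word pairs. The result is a crude frequency score,
    not a probabilistic model of sound change.
    """
    counts = defaultdict(Counter)
    for src, tgt in pairs:
        counts[src][tgt] += 1
    scores = {}
    for src, tgt_counts in counts.items():
        total = sum(tgt_counts.values())
        scores[src] = {tgt: n / total for tgt, n in tgt_counts.most_common()}
    return scores


# Word-initial consonants, Latin : English (illustrative, hand-aligned).
initial_pairs = [
    ("p", "f"),  # pater : father
    ("p", "f"),  # pes : foot
    ("p", "f"),  # piscis : fish
    ("p", "p"),  # paternus : paternal (a loanword, not inherited)
    ("t", "θ"),  # tres : three
    ("t", "θ"),  # tenuis : thin
    ("k", "h"),  # cornu : horn
    ("k", "h"),  # centum : hundred
    ("k", "h"),  # cor : heart
]

for src, candidates in correspondence_scores(initial_pairs).items():
    print(src, candidates)
```

Here the minority correspondence p : p comes from the borrowed item, so even this toy ranking hints at how regular correspondences could help separate inherited from borrowed vocabulary.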

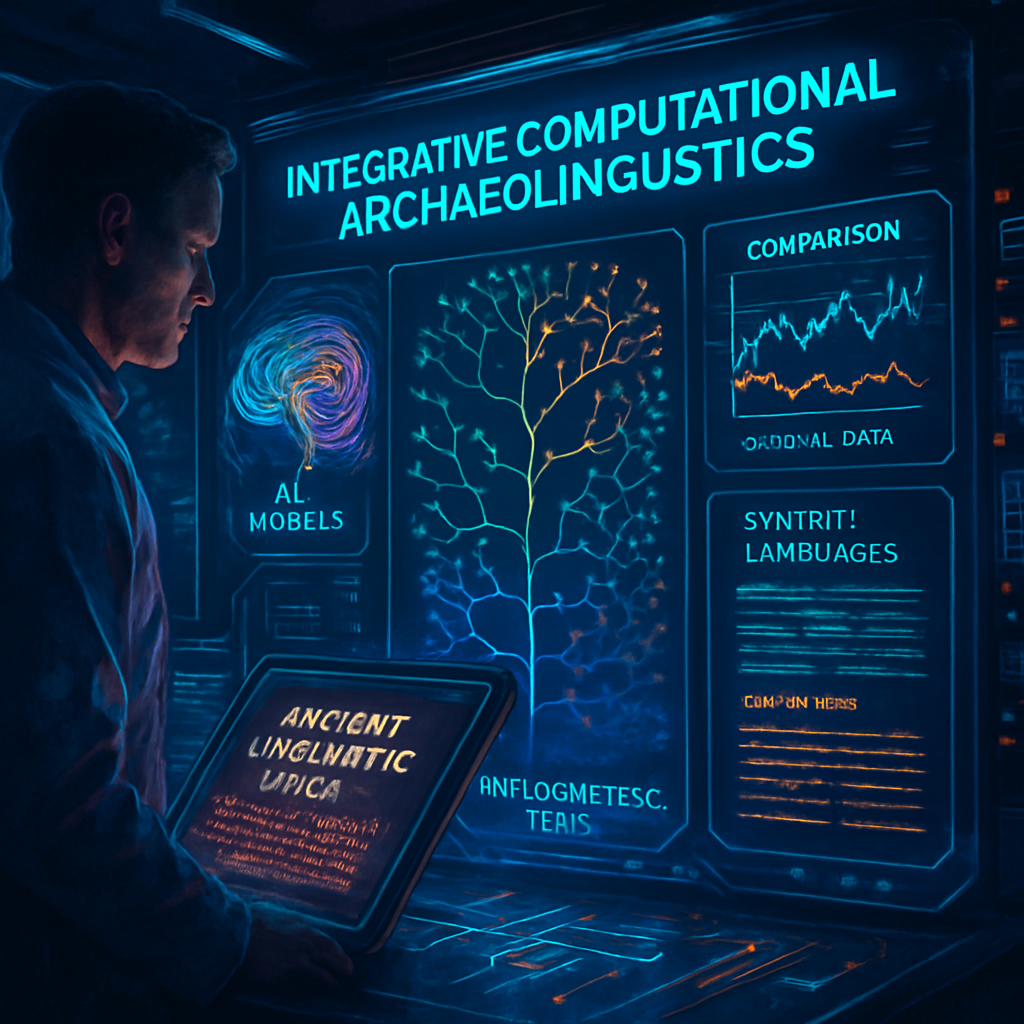

Integrative Synthesis: AI for Phylogenetic Inference, Knowledge Graphs, and Simulated Evolution

The true power of AI in archaeolinguistics may lie in its ability to integrate diverse types of evidence and analytical approaches. LLMs could serve as the engine for more sophisticated phylogenetic models. Instead of relying on a few dozen or a few hundred lexical items, AI could analyze thousands of features (phonological, morphological, syntactic, and lexical) to infer language relationships, potentially helping to resolve long-standing debates about language family structures. These models could explicitly incorporate uncertainty and model complex scenarios of borrowing and convergence, moving beyond traditional tree-like representations when necessary, perhaps leading to more nuanced network models similar to those discussed by Fuchs et al. (2025) for biological networks arising from tree decorations.
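As a rough illustration of feature-based comparison, the sketch below computes pairwise distances between languages from binary feature vectors, skipping features whose value is unknown for either language. Everything here is an assumption made for the example: the language names, the feature values, and the helper names `feature_distance` and `distance_matrix` are hypothetical, and a plain mismatch rate is a deliberately simple stand-in for the richer models the paragraph envisions. A distance matrix of this kind is a typical input to distance-based tree-building methods.

```python
from itertools import combinations


def feature_distance(x, y):
    """Share of mismatching features among those known for both languages."""
    shared = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    if not shared:
        raise ValueError("no features are known for both languages")
    return sum(a != b for a, b in shared) / len(shared)


def distance_matrix(features):
    """Pairwise distances for every unordered pair of languages."""
    return {
        (l1, l2): feature_distance(features[l1], features[l2])
        for l1, l2 in combinations(sorted(features), 2)
    }


# Hypothetical languages and feature values; None marks missing data.
features = {
    "Lang_A": [1, 0, 1, 1, None, 0],
    "Lang_B": [1, 0, 1, 0, 1, 0],
    "Lang_C": [0, 1, 0, 0, 1, 1],
}

matrix = distance_matrix(features)
closest_pair = min(matrix, key=matrix.get)
print(matrix)
print("closest pair:", closest_pair)
```

Ignoring missing values is only one way to handle sparse data; a model that represents uncertainty explicitly would treat unknown features as distributions rather than dropping them.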

Inspired by the creation of knowledge graphs from textual data (Liu et al., 2025; Zhu et al., 2025), computational archaeolinguistics could leverage LLMs to construct vast, interconnected networks of etymological data, proposed sound laws, reconstructed forms, and even links to archaeological or genetic data. Such a "Proto-Language Knowledge Graph" could be queried to explore complex hypotheses, such as correlating the spread of a particular linguistic feature with known human migrations or cultural innovations. Moreover, a highly speculative but exciting frontier is the use of LLMs for simulated language evolution. Given a set of hypothesized proto-forms and generative rules (e.g., sound laws, grammatical changes learned by the AI), an LLM could attempt to "evolve" synthetic daughter languages. The similarity of these synthetic languages to actual attested languages could then serve as a measure of the plausibility of the initial proto-language reconstruction and the evolutionary rules, creating a powerful iterative method for hypothesis testing.
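The simulate-and-compare loop described above can be sketched without any LLM, using ordered rewrite rules as the "generative rules". The proto-forms, rules, and attested forms below are invented toy data, and the names `evolve` and `plausibility` are hypothetical; exact-match rate is used as the simplest possible similarity measure. The example also shows why rule order matters: palatalization of k before i must apply before final i is lost, or its conditioning environment disappears.

```python
import re


def evolve(form, rules):
    """Apply ordered (pattern, replacement) sound-change rules to one form."""
    for pattern, replacement in rules:
        form = re.sub(pattern, replacement, form)
    return form


def plausibility(proto_forms, rules, attested):
    """Fraction of simulated daughter forms that exactly match attested ones."""
    matches = sum(evolve(p, rules) == a for p, a in zip(proto_forms, attested))
    return matches / len(proto_forms)


# Invented toy data: no real language is represented here.
proto_forms = ["taki", "kita", "paki"]
attested = ["tatʃ", "tʃita", "pak"]

rules = [
    (r"k(?=i)", "tʃ"),  # palatalization: k becomes tʃ before i
    (r"i$", ""),        # loss of word-final i
]

print([evolve(p, rules) for p in proto_forms])
print("score:", plausibility(proto_forms, rules, attested))
print("reversed order:", evolve("taki", list(reversed(rules))))
```

In this toy run the third attested form does not match the simulation, which is the kind of residue that would prompt a revised rule set, a revised proto-form, or a borrowing hypothesis in the next iteration.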

Conclusion

The integration of AI, particularly advanced language models, into archaeolinguistics could substantially change our ability to reconstruct proto-languages and understand linguistic prehistory. By automating aspects of data analysis, identifying complex patterns that are difficult for human researchers to detect, generating novel hypotheses, and enabling new forms of integrative modeling and simulation, AI can significantly augment the historical linguist's toolkit. This could lead to more robust reconstructions, a deeper understanding of the mechanisms of language change, and a richer picture of how language families diversified and spread.

However, significant challenges remain. The "black box" nature of some AI models requires careful validation and interpretation by linguistic experts. Biases in training data can lead to skewed or inaccurate inferences. The development of AI tools must be guided by linguistic theory to ensure that they are asking and answering meaningful questions. Ethical considerations regarding the ownership and interpretation of linguistic heritage, especially concerning Indigenous languages (Pinotti et al., 2025; Dexter-Sobkowiak, 2023), must also be paramount.

The future of computational archaeolinguistics will likely involve a symbiotic relationship between human expertise and artificial intelligence. Linguists will define the research questions, curate the data, and critically evaluate AI-generated outputs, while AI will provide the computational power to explore vast datasets and complex models. This collaboration holds the potential to unlock new insights into the origins and evolution of human language, pushing the boundaries of what we thought possible in peering into our distant linguistic past. The journey to reconstruct proto-languages is far from over, but with AI as a new compass, we may navigate these uncharted waters with greater confidence and discover shores previously hidden from view.

References

- Alduais, A., Yassin, A. A., & Allegretta, S. (2025). Computational linguistics: a scientometric review. Quality & Quantity. https://doi.org/10.1007/s11135-025-02138-2

- Allentoft, M. E., Sikora, M., Fischer, A., Sjögren, K.-G., Ingason, A., Macleod, R., ... & Willerslev, E. (2023). 100 ancient genomes show repeated population turnovers in Neolithic Denmark. Nature. https://doi.org/10.1038/s41586-023-06862-3

- Dexter-Sobkowiak, E. (2023). “¡Amo kitlapanas tetl!”: Heritage Language and the Defense Against Fracking in the Huasteca Potosina, Mexico. In: Living with Nature, Cherishing Language. https://doi.org/10.1007/978-3-031-38739-5_8

- Fuchs, M., Steel, M., & Zhang, Q. (2025). Asymptotic Enumeration of Normal and Hybridization Networks via Tree Decoration. Bulletin of Mathematical Biology. https://doi.org/10.1007/s11538-025-01444-y

- List, J.-M., Pathmanathan, J. S., Lopez, P., & Bapteste, E. (2016). Unity and disunity in evolutionary sciences: process-based analogies open common research avenues for biology and linguistics. Biology Direct. https://doi.org/10.1186/s13062-016-0145-2

- Liu, J., Liu, Z., Shen, Y., Zhang, R., Song, N., Liu, J., & Pei, L. (2025). Multi-dimensional intelligent reorganization and utilization of knowledge in ‘Biographies of Chinese Thinkers’. npj Heritage Science. https://doi.org/10.1038/s40494-025-01669-z

- Pinotti, T., Adler, M. A., Mermejo, R., Bitz-Thorsen, J., McColl, H., Scorrano, G., ... & Willerslev, E. (2025). Picuris Pueblo oral history and genomics reveal continuity in US Southwest. Nature. https://doi.org/10.1038/s41586-025-08791-9

- Sagart, L. (2011). How Many Independent Rice Vocabularies in Asia? Rice. https://doi.org/10.1007/s12284-011-9077-8

- Smith, A. D. (2023). Constraint reranking in diachronic OT: binary-feet and word-minimum phenomena in Austronesian. Journal of East Asian Linguistics. https://doi.org/10.1007/s10831-023-09261-x

- Wandl, F., & Thelitz, T. H. K. (2024). RelChronVis: an interactive web application for visualizing the relative chronology of language changes. International Journal of Digital Humanities. https://doi.org/10.1007/s42803-024-00086-1

- Zhu, J., Fu, Y., Zhou, J., & Chen, D. (2025). A Temporal Knowledge Graph Generation Dataset Supervised Distantly by Large Language Models. Scientific Data. https://doi.org/10.1038/s41597-025-05062-0