Biophotonic Sonification of Cellular Automata: Translating Microscopic Biological Dynamics into Audible Artistic Representations

The intricate dance of life at the cellular level, characterized by ceaseless activity and complex interactions, generates a wealth of dynamic data. Modern biophotonic techniques allow us to peer into this microscopic world with unprecedented detail, capturing signals related to morphology, signaling, and metabolic states. Simultaneously, cellular automata (CA) offer a powerful computational framework for modeling complex systems that emerge from simple local rules, often mirroring patterns seen in nature. Sonification, the translation of data into non-speech sound, presents an alternative modality for interpreting complex datasets and holds potential for artistic expression.

This article proposes an interdisciplinary approach, the biophotonic sonification of cellular automata: microscopic biological dynamics, observed in real time via biophotonics, drive evolving CA models whose patterns are then translated into audible, potentially artistic, representations. The aim of this synthesis is to open new avenues for scientific discovery, intuitive data understanding, and bio-artistic creation.

Capturing Cellular Whispers: Biophotonics as a Data Source

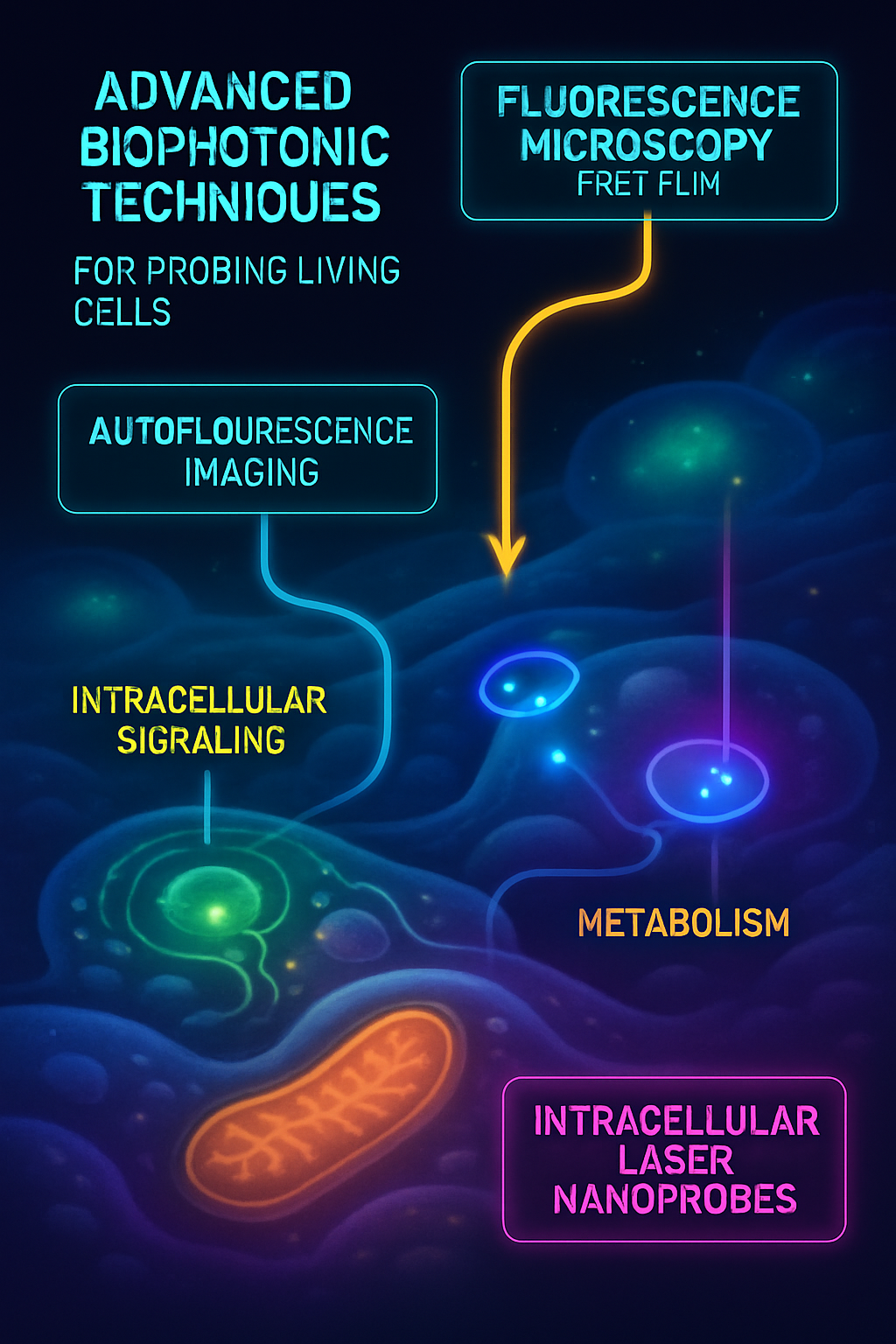

Biophotonics provides a diverse toolkit for observing and quantifying cellular dynamics in living systems. Techniques such as fluorescence microscopy, including advanced methods like FRET (Förster Resonance Energy Transfer), FLIM (Fluorescence Lifetime Imaging Microscopy), and super-resolution imaging, can track molecular interactions, protein conformational changes, and subcellular structures with high specificity (Peng et al., 2024). For example, genetically encoded calcium indicators convert intracellular calcium fluxes—critical for cell signaling—into light signals. Autofluorescence from intrinsic fluorophores like NAD(P)H can serve as a label-free indicator of cellular metabolic states. Furthermore, intracellular laser particles and other nanoprobes are emerging as tools for sensing biomechanical forces and chemical changes within cells (Schubert, 2025). These methods generate rich, multi-dimensional datasets—photon counts fluctuating over time, spatial reorganizations of labeled components, spectral shifts, and changes in optical properties—all reflecting the underlying biological activity. The key proposition here is to harness these dynamic biophotonic signals not just for visual analysis, but as direct inputs for computational models.
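Before a raw fluorescence trace can serve as model input, it usually needs to be normalized. The sketch below shows one common preprocessing step that this article does not itself prescribe: converting photon counts to a relative change ΔF/F0. The function name `delta_f_over_f`, the percentile-based baseline, and the synthetic trace are all illustrative assumptions.

```python
import numpy as np

def delta_f_over_f(trace, baseline_percentile=10.0):
    """Normalize a raw fluorescence trace to dF/F0.

    F0 is estimated as a low percentile of the trace. This is a common
    heuristic; the percentile value is an assumption for illustration.
    """
    trace = np.asarray(trace, dtype=float)
    f0 = np.percentile(trace, baseline_percentile)
    if f0 <= 0:
        raise ValueError("baseline fluorescence must be positive")
    return (trace - f0) / f0

# Synthetic photon counts (not real data): flat baseline with one transient
raw = np.array([100, 100, 100, 150, 200, 150, 100, 100], dtype=float)
dff = delta_f_over_f(raw)
```

The normalized trace is dimensionless, which makes it easier to compare regions with different baseline brightness before thresholding or scaling them for a downstream model.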

Cellular Automata: A Generative Bridge for Biological Complexity

Cellular automata are discrete dynamical systems in which a grid of cells, each in one of a finite set of states, evolves according to local rules that depend on the states of neighboring cells. Despite their simplicity, CA can generate highly complex, self-organizing patterns (Wolfram, 1983) reminiscent of natural phenomena, including biological processes such as tissue growth, neural network activity, and pattern formation. The core proposal is to use real-time or time-series biophotonic data to set the states or modulate the update rules of a CA. For instance, the intensity of a biophotonic signal from a specific cellular region could determine the initial state of a CA cell or group of cells, or it could modulate the probability that a rule is applied within the CA. As the biological system evolves and its biophotonic signature changes, the CA coupled to it changes as well, creating a dynamic, bio-driven computational model. The CA thus becomes a generative engine that translates the continuous, often noisy, analog data from biophotonics into discrete, evolving patterns that can be further processed or interpreted.
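The two couplings described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a definitive implementation: it uses a one-dimensional elementary CA with periodic boundaries, a synthetic drifting intensity bump in place of measured biophotonic data, and hypothetical helper names (`rule_table`, `seed_states`, `step`). Rule 90 is an arbitrary choice.

```python
import numpy as np

def rule_table(rule_number):
    """Lookup table for an elementary CA rule (Wolfram numbering)."""
    return np.array([(rule_number >> i) & 1 for i in range(8)], dtype=np.uint8)

def seed_states(intensity, threshold=0.5):
    """Coupling 1: threshold normalized intensities into binary initial states."""
    return (np.asarray(intensity, dtype=float) >= threshold).astype(np.uint8)

def step(states, table, apply_prob, rng):
    """Coupling 2: cell i applies the rule with probability apply_prob[i],
    otherwise it keeps its current state. Boundaries are periodic."""
    left = np.roll(states, 1)    # state of the left neighbor
    right = np.roll(states, -1)  # state of the right neighbor
    idx = (left << 2) | (states << 1) | right
    proposed = table[idx]
    apply = rng.random(states.shape) < apply_prob
    return np.where(apply, proposed, states).astype(np.uint8)

rng = np.random.default_rng(0)
n_cells, n_frames = 32, 20
positions = np.arange(n_cells)
table = rule_table(90)

frames = []
states = None
for t in range(n_frames):
    # Synthetic stand-in for a normalized intensity profile in [0, 1]:
    # a Gaussian bump drifting to the right, one cell per frame.
    intensity = np.exp(-0.5 * ((positions - t) / 3.0) ** 2)
    if states is None:
        states = seed_states(intensity)
    else:
        states = step(states, table, intensity, rng)
    frames.append(states)

history = np.stack(frames)  # shape: (n_frames, n_cells)
```

With this design, cells in dim regions rarely update and so tend to freeze, while cells under the bright bump follow the deterministic rule. Other couplings, such as letting the signal select among several rules, would fit the same structure.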

Translating Patterns to Sound: Sonification Strategies for CA Dynamics

Once the biophotonically driven CA generates evolving patterns, sonification techniques can map them into the auditory domain. Strategies range from direct parameter mapping (e.g., the state of a CA cell to a specific pitch or timbre, or the density of active cells to loudness) to more complex mappings in which emergent CA structures (like gliders, oscillators, or chaotic domains in Conway's Game of Life) trigger distinct musical motifs, textures, or rhythmic events. The spatial arrangement of CA cells can be mapped to stereo panning or other spatial audio parameters, providing an auditory sense of the system's geometry. For example, Toharia et al. (2013) sonified the distribution of dendritic spines, revealing anatomical subtleties through sound. Extending this idea, sonifying the dynamics of a CA that itself mirrors biophotonic changes could provide intuitive insight into temporal processes, such as the propagation of a signaling wave across a cell population represented by CA activity, or the rhythm of metabolic oscillations made audible. The challenge, and the art, lies in creating mappings that are both informative and perceptually salient.
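A direct parameter mapping of the kind described above might look like the following sketch. Every mapping choice here is an assumption made for illustration: cell index selects a pitch on a pentatonic scale, horizontal position sets linear stereo panning, and the fraction of active cells scales loudness. The names `cell_frequency`, `sonify_generation`, and `sonify_history` are hypothetical, and the input is a toy pattern rather than the output of a data-driven CA.

```python
import numpy as np

SAMPLE_RATE = 22050
PENTATONIC = [0, 2, 4, 7, 9]  # semitone offsets of a major pentatonic scale

def cell_frequency(index, base_midi=48):
    """Map a cell index to a frequency in Hz on a pentatonic scale."""
    octave, degree = divmod(index, len(PENTATONIC))
    midi = base_midi + 12 * octave + PENTATONIC[degree]
    return 440.0 * 2.0 ** ((midi - 69) / 12.0)

def sonify_generation(states, duration=0.2):
    """Render one CA generation as a stereo buffer of shape (samples, 2)."""
    states = np.asarray(states)
    n_samples = int(round(SAMPLE_RATE * duration))
    t = np.arange(n_samples) / SAMPLE_RATE
    out = np.zeros((n_samples, 2))
    active = np.flatnonzero(states)
    if active.size == 0:
        return out  # no active cells: silence
    for i in active:
        tone = np.sin(2 * np.pi * cell_frequency(int(i)) * t)
        pan = i / max(len(states) - 1, 1)  # 0 = far left, 1 = far right
        out[:, 0] += (1.0 - pan) * tone
        out[:, 1] += pan * tone
    out /= active.size                   # average so samples stay in [-1, 1]
    density = active.size / len(states)  # fraction of active cells -> loudness
    envelope = np.hanning(n_samples)     # fade in and out to avoid clicks
    return out * density * envelope[:, None]

def sonify_history(history, duration=0.2):
    """Concatenate the audio for successive generations."""
    return np.concatenate([sonify_generation(row, duration) for row in history])

# Toy pattern: a single active cell moving from left to right over 8 generations
history = np.eye(16, dtype=int)[:8]
audio = sonify_history(history)
```

The resulting float buffer stays within [-1, 1], so it can be scaled to 16-bit integers and written to a WAV file with Python's standard `wave` module. For wide grids, the pitch mapping should be checked against the Nyquist limit of the chosen sample rate.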

The Emergence of Bio-Art: Aesthetic and Perceptual Dimensions

The biophotonic sonification of cellular automata goes beyond data representation; it opens a pathway to novel forms of bio-art. By translating the normally invisible and silent processes of cellular life into soundscapes, we can create powerful aesthetic experiences. These soundscapes would not be arbitrary: they would be tied directly to biological activity, filtered and re-patterned through the lens of cellular automata. Such artistic representations could foster a deeper, more intuitive connection with the microscopic world, making complex biological phenomena accessible and engaging to a wider audience. The approach could also serve as an exploratory tool, in which unexpected auditory patterns highlight subtle dynamics in the biophotonic data or the CA model that visual inspection alone might miss. It parallels the ongoing evolution of algorithmic composition tools, such as machine-learning-based music generation systems (Colafiglio et al., 2024). This interdisciplinary fusion could lead to interactive installations where audiences "hear" cellular responses to stimuli in real time, fostering new dialogues between science, art, and the public.

Challenges and Future Horizons

The proposed synthesis faces several challenges. Technically, real-time acquisition and processing of biophotonic data to drive a CA, followed by immediate sonification, require significant computational resources and sophisticated algorithms. Designing sonification mappings that are both meaningful and aesthetically pleasing, while accurately reflecting complex multi-dimensional data, is a non-trivial task. Furthermore, validating the scientific insights gained through such sonifications will be crucial.

Looking ahead, future developments could incorporate machine learning to optimize sonification parameters or to identify salient biophotonic features for CA input. Interactive systems allowing users to manipulate CA rules or biophotonic inputs and hear the resulting changes could become powerful research and educational tools. Integration with virtual or augmented reality could offer immersive experiences of these cellular soundscapes. Ultimately, the biophotonic sonification of cellular automata offers a rich, speculative domain for exploring the intersection of biology, computation, sound, and art.

Conclusion

The fusion of biophotonics, cellular automata, and sonification offers a novel and exciting paradigm for exploring and representing microscopic biological dynamics. By using biophotonic signals to drive cellular automata and then translating the CA's emergent behavior into sound, we can create a unique bridge between the invisible world of cellular life and human auditory perception. This approach not only holds promise for enhancing scientific understanding by revealing hidden patterns and temporal relationships in complex biological data but also opens up new frontiers for bio-artistic expression, allowing us to listen to the intricate symphonies played out within living cells. Further exploration of this interdisciplinary field could lead to innovative tools for research, education, and artistic creation, fostering a deeper appreciation for the complexity and beauty of life at its most fundamental level.

References

- Colafiglio, T., Ardito, C., Sorino, P., Lofù, D., Festa, F., Di Noia, T., & Di Sciascio, E. (2024). NeuralPMG: A Neural Polyphonic Music Generation System Based on Machine Learning Algorithms. Cognitive Computation. https://doi.org/10.1007/s12559-024-10280-6

- Peng, C. S., Zhang, Y., Liu, Q., Marti, G. E., Huang, Y. A., Südhof, T. C., Cui, B., & Chu, S. (2024). Nanometer-resolution tracking of single cargo reveals dynein motor mechanisms. Nature Chemical Biology. https://doi.org/10.1038/s41589-024-01694-2

- Schubert, M. (2025). Biologische und biomedizinische Anwendungen intrazellularer Laserpartikel. BIOspektrum. https://doi.org/10.1007/s12268-025-2463-3

- Toharia, P., Morales, J., Juan, O., Fernaud, I., Rodríguez, A., & DeFelipe, J. (2013). Musical Representation of Dendritic Spine Distribution: A New Exploratory Tool. Neuroinformatics, 11(4), 463–475. https://doi.org/10.1007/s12021-013-9195-0

- Wolfram, S. (1983). Statistical mechanics of cellular automata. Reviews of Modern Physics, 55(3), 601–644. https://doi.org/10.1103/RevModPhys.55.601